
Message boards : Number crunching : Wishlist: Add CUDA support


old_user171805 (Joined: 6 Mar 06; Posts: 2; Credit: 25,066; RAC: 0)

Add CUDA support; you would get awfully more computing power.


Joined: 19 Apr 08; Posts: 179; Credit: 4,306,992; RAC: 0

> Add CUDA support; you would get awfully more computing power.

Hello Henri,

Applications that benefit from CUDA can be threaded, or broken into independent parts:

A+B=C, D*E=F, H/I=J (we can get J immediately without needing C or F)

Whereas calculations in climate modeling are highly interdependent (i.e. the temperature in Minnesota depends heavily on air masses traveling through South Dakota):

A+B=C, C*E=F, F/I=J (we can't get J without first calculating C.)

An inverse corollary to your question: why can't Folding@Home model the entire metabolism of a living cell? Answer: they'd have to code for a small number of threads running on a general-purpose processor, abandoning CUDA.

Future hardware and software may bring breakthroughs. But keep in mind that logs and coal are still transported by trains, even though we've had planes for a hundred years.
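To make the distinction above concrete, here is a minimal Python sketch; the function names `independent` and `dependent` are hypothetical, chosen only for illustration. The first function hands the three unrelated operations to a thread pool, since none of them needs another's result; the second has to run step by step because each line consumes the previous one. It shows the dependency structure only: Python threads won't actually speed up arithmetic like this, and a real GPU port works on millions of such operations, not three.

```python
from concurrent.futures import ThreadPoolExecutor
import operator

def independent(a, b, d, e, h, i):
    """C, F and J do not depend on each other, so they can be evaluated concurrently."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        c = pool.submit(operator.add, a, b)
        f = pool.submit(operator.mul, d, e)
        j = pool.submit(operator.truediv, h, i)
        return c.result(), f.result(), j.result()

def dependent(a, b, e, i):
    """Each step consumes the previous result, so the chain is inherently sequential."""
    c = a + b      # must finish first
    f = c * e      # needs C
    j = f / i      # needs F
    return j

print(independent(1, 2, 3, 4, 10, 2))
print(dependent(1, 2, 4, 2))
```

With A=1, B=2, D=3, E=4, H=10, I=2, the independent version returns (3, 12, 5.0) in any order of completion, while the chained version can only produce J=6.0 after C and F are known.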


Joined: 19 Apr 08; Posts: 179; Credit: 4,306,992; RAC: 0

Hmm.... Seems like my command of the alphabet has diminished somewhat.


Joined: 16 Dec 05; Posts: 6; Credit: 28,335,955; RAC: 0

Applications that aren't embarrassingly parallel can still be sped up quite nicely by CUDA. For example, 3D incompressible Navier-Stokes flow solvers regularly achieve on the order of a 10x improvement over high-end CPUs, and they have multiple dependent steps. Even on GPU clusters, where there is internode communication between each step, one can get impressive results (>100x speedup over threaded CPU code).

I suspect a much larger issue is the sheer code size. A simple flow solver might be 4-10 thousand lines, with maybe 2k lines of CUDA, and there are quite a few reference benchmarks to test its quality. In contrast, the CPDN code is 1 million lines of Fortran (Christensen, Aina, Stainforth 2005), and the results have gone through extensive and very lengthy testing. I doubt all 1M lines are core code, but it's pretty clear there is a lot going on.

One of the things we found, and it is pretty well supported in the literature for flow solvers, is that porting individual kernels to the GPU gets you a relatively small overall speedup, nothing in the 10x range. Besides the obvious Amdahl's Law bit (making 1% of your runtime infinitely fast results in a very small overall application improvement), the main problem is copying the data back and forth to the GPU. It turns out that's a major time sink. So while, yes, one could take the small and compute-intensive pressure Poisson solver and make it run at 100+ GFLOPS, which is much faster than the CPU, it ends up not speeding up your entire app much. A few more kernels and you might hit 2-3x, depending on the hot spots in the application.

The big gain comes when you get all the data on the GPU and can stop the memory traffic. That means putting pretty much all of your core application in CUDA. Assume that's all possible: that the core computation is more in the realm of 10-100k lines of code and is all ported over (getting CUDA to run isn't too hard, but getting it to run fast is a fair bit of work).
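Amdahl's Law, and the cost of copying data to and from the GPU, can be put into numbers. The sketch below is a minimal Python illustration, assuming the textbook form of Amdahl's Law, speedup = 1 / ((1 - p) + p / s), where p is the fraction of runtime accelerated and s is the kernel speedup. The helper `offload_speedup`, the 60% figure and all timings are hypothetical, not measurements from any real solver.

```python
def amdahl_speedup(p, s):
    """Overall speedup when a fraction p of the runtime is made s times faster.
    Pass s = float("inf") to model an 'infinitely fast' kernel."""
    return 1.0 / ((1.0 - p) + p / s)

def offload_speedup(t_cpu, t_gpu, t_transfer):
    """Effective speedup of one offloaded kernel once the time spent copying
    data between host and GPU memory is counted (hypothetical helper)."""
    return t_cpu / (t_gpu + t_transfer)

# Making 1% of the runtime infinitely fast barely helps.
print(amdahl_speedup(0.01, float("inf")))
# Porting kernels covering 60% of the runtime, each 100x faster (assumed figures).
print(amdahl_speedup(0.60, 100.0))
# Hypothetical timings in ms: a 100x kernel speedup eaten by 20 ms of copies.
print(offload_speedup(t_cpu=50.0, t_gpu=0.5, t_transfer=20.0))
```

Making 1% of runtime infinitely fast gives about 1.01x overall; accelerating 60% of runtime by 100x gives roughly 2.5x, in line with the 2-3x mentioned above; and in the hypothetical offload case, a kernel that is 100x faster on its own shrinks to about 2.4x once 20 ms of copying is added.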
Now there is a huge validation task. It doesn't matter how fast it is if it isn't correct, after all. Unfortunately, this is a pretty daunting job.

Anyway, I'd love to see CPDN in CUDA too, but there are some good reasons why it's not happening. I suspect we'll have more luck waiting for the next generation of models to come out of a research group, with data-parallel operation written in from the start and some grad students doing the grunt work of validating them.

Caveat: I do CFD in CUDA, but I don't do climate modeling, nor do I have any additional insight into CPDN beyond the public papers and posts.
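One reason validation is hard is that a parallel port rarely reproduces the original results bit for bit: a GPU reduction typically adds numbers in a different order, and floating-point addition isn't associative. The minimal Python sketch below (all function names are hypothetical) compares a sequential sum and a tree-style pairwise sum against a correctly rounded reference from `math.fsum`, accepting a result when it agrees within a relative tolerance. The tolerance of 1e-9 is an arbitrary assumption; choosing a defensible tolerance for a full climate model is a much harder question.

```python
import math
import random

def sequential_sum(xs):
    """Left-to-right accumulation, as a simple serial loop would do it."""
    total = 0.0
    for x in xs:
        total += x
    return total

def pairwise_sum(xs):
    """Tree-style reduction: add neighbours in pairs until one value remains.
    This mimics the summation order a parallel reduction tends to use."""
    xs = list(xs)
    if not xs:
        return 0.0
    while len(xs) > 1:
        paired = [xs[k] + xs[k + 1] for k in range(0, len(xs) - 1, 2)]
        if len(xs) % 2:            # odd length: carry the last element forward
            paired.append(xs[-1])
        xs = paired
    return xs[0]

def validate(candidate, reference, rel_tol=1e-9):
    """Accept a result if it matches the reference within a relative tolerance
    (1e-9 is an arbitrary, hypothetical choice)."""
    return math.isclose(candidate, reference, rel_tol=rel_tol)

random.seed(0)
data = [random.uniform(0.0, 1.0) for _ in range(100_000)]
reference = math.fsum(data)        # correctly rounded sum of the data

serial = sequential_sum(data)
parallel_style = pairwise_sum(data)
print("bitwise identical:", serial == parallel_style)
print("serial ok:", validate(serial, reference))
print("pairwise ok:", validate(parallel_style, reference))
```

The two summation orders may or may not agree to the last bit on a given run, which is exactly why a tolerance-based comparison, rather than an equality check, is the usual starting point.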


Joined: 8 Aug 04; Posts: 69; Credit: 1,561,341; RAC: 0

> Add CUDA support; you would get awfully more computing power.

People with CUDA graphics cards are already gathering around MilkyWay@home and SETI@home, where CUDA-equipped computers handle roughly ten times the workload of a standard CPU-based cruncher.

A lot of people do not have CUDA graphics, for one reason or another. Some of those, me included, are staying here at climateprediction, and I would prefer that the crew concentrate on refining the science rather than trying to "specialize" the software for a specific graphics card. Porting millions of lines of code from a supercomputer (made for the very few, the Met Office for one) to a PC-based cruncher that I can actually afford seems to be a huge task in itself. If the staff really have some spare time on their hands, I would prefer they try to make my computer do more for them, i.e. optimize for AMD or port to 64-bit code, for example.

Your crunchers seem to be happy elsewhere anyway. (No bad feelings.)

All the best and happy crunching.
ChisD.

Byron Leigh Hatch @ team Carl ... (Joined: 17 Aug 04; Posts: 289; Credit: 44,103,664; RAC: 0)

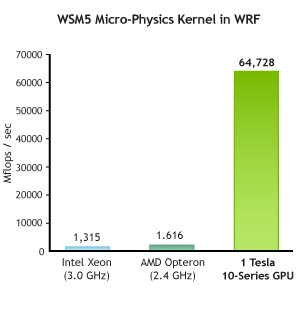

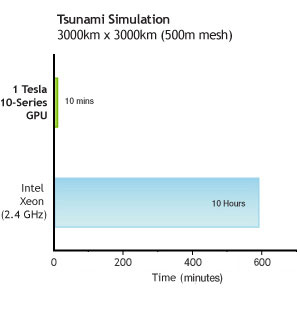

I'm not a scientist, programmer, or even that well educated in science, but I do love mathematics, science, computers and learning. With that in mind, I wonder if the following web page by NVIDIA would be of any interest to you scientists involved in climate prediction and weather, atmospheric and ocean modeling, or even to programmers involved in parallel programming for CUDA and the new Tesla M2050, which I think is available from 1st September 2010? Weather and climate prediction are two different things, I know :)

http://www.nvidia.com/object/weather.html

Anyway, I'm like Henri: someday I sure would like to see CUDA support for climateprediction.net.

Best wishes,
Byron

PS: Several weather and ocean modeling applications, such as WRF (Weather Research and Forecasting model) and tsunami simulations, are achieving tremendous speedups using CUDA and GPUs, enabling savings in time and improvements in accuracy. (Weather Modeling Software on CUDA)


Joined: 12 Feb 08; Posts: 66; Credit: 4,877,652; RAC: 0

The text under the graph on the NVIDIA marketing page you linked to reveals that even though the speed of the microphysics kernel increased significantly, the overall program performance increased by only 25%. In addition, by going to the WRF page you can see that they are comparing a single-threaded CPU app to a multi-threaded GPU app. Also, google Amdahl's law.
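Amdahl's law can also be run backwards to see what a 25% overall gain implies. Assuming the textbook form S = 1 / ((1 - p) + p / s), solving for p gives p = (1 - 1/S) / (1 - 1/s). The minimal Python sketch below plugs in the 25% quoted above (S = 1.25); the kernel speedups tried are hypothetical values, not figures from the NVIDIA page.

```python
def overall_speedup(p, s):
    """Amdahl's law: overall speedup when fraction p of runtime runs s times faster."""
    return 1.0 / ((1.0 - p) + p / s)

def accelerated_fraction(overall, s):
    """Amdahl's law solved for p, given an observed overall speedup and a kernel speedup s."""
    return (1.0 - 1.0 / overall) / (1.0 - 1.0 / s)

observed = 1.25                    # the 25% overall gain quoted in the post
for kernel_speedup in (10.0, 100.0, float("inf")):   # hypothetical kernel speedups
    p = accelerated_fraction(observed, kernel_speedup)
    print(f"s = {kernel_speedup:g}: microphysics share of runtime p = {p:.3f}")
    # Round trip: plugging p back into Amdahl's law recovers the observed speedup.
    assert abs(overall_speedup(p, kernel_speedup) - observed) < 1e-12
```

Even with an infinitely fast kernel, a 1.25x overall speedup means the accelerated part was only about 20% of the original runtime; with a 10x kernel the figure is about 22%.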

soft spirit (Joined: 23 Apr 10; Posts: 3; Credit: 803,617; RAC: 0)

> The text under the graph on the Nvidia marketing page you linked to reveals that even though the speed of the micro-physics kernel part increased significantly the overall program performance increased by only 25%. In addition, by going to the WRF page you can see that they are comparing a single-threaded CPU app to a multi-threaded GPU app.

From my experience at SETI: the same units I was processing on an old CPU (AMD Core 2, moderate speed, about two years old), compared with similar units transferred to a moderate graphics card (NVIDIA 9600 GT), increased the output of that machine several times over. Both the CPU and the GPU can perform tasks at the same time, so even with similar speeds you get a 2-3x inherent increase. The real boost comes not from running just two tasks at a time on a dual core, but from running on all of the GPU's parallel cores (16 to 250+), which is where the performance comes from.

The performance gains IF the GPU can be put to this project (sorry, I have to defer to the programmers on the possibility/difficulty portion of the discussion) are potentially IMMENSE.

That said, be really careful with new code rollout. Crashing the project to do it... not good. Very bad. Not good, not good.


Joined: 5 Sep 04; Posts: 7629; Credit: 24,240,330; RAC: 0

There's no need to worry about new, untested code, because climate models aren't suited to the current types of GPU. (Not enough resolution.) And the CPU code for new types of models is tested for months on our beta site before release.
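Assuming "resolution" here refers to floating-point precision (many consumer GPUs of the time, such as the 9600 GT mentioned above, had little or no double-precision support), the minimal Python sketch below shows why that can matter for long-running accumulations. It emulates single precision with `struct` round-trips (the helper names are hypothetical) and adds 0.1 two hundred thousand times in both precisions.

```python
import struct

def to_float32(x):
    """Round a Python float (IEEE 754 double) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def accumulate(step, n, single=False):
    """Add `step` to a running total n times, optionally rounding every
    intermediate result to single precision to emulate float32 hardware."""
    if single:
        step = to_float32(step)
    total = 0.0
    for _ in range(n):
        total = total + step
        if single:
            total = to_float32(total)
    return total

n = 200_000
exact = 20_000.0                   # 0.1 * n in exact arithmetic
double_total = accumulate(0.1, n)
single_total = accumulate(0.1, n, single=True)
print("double error:", abs(double_total - exact))
print("single error:", abs(single_total - exact))
```

The double-precision total stays within a tiny fraction of 20,000, while the single-precision total drifts visibly, because once the running sum is large the spacing between representable float32 values is too coarse to add 0.1 accurately. A climate model runs for thousands of timesteps, so this kind of drift is one plausible reading of the concern.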


Joined: 20 Feb 06; Posts: 158; Credit: 1,251,176; RAC: 0

I am pleased to hear that CUDA is not suitable for CPDN. I have just ceased SETI, which I started in May 1999, because (as it seems to me) there are repeated outages, which I attribute mainly to the implementation of CUDA. It encouraged clients to cache literally thousands of tasks, leaving the other tasks in those workunits waiting months for validation, with consequently astronomically high pending tasks, making RAC completely meaningless.

My advice from experience: LEAVE WELL ALONE.

I very much regret ceasing SETI, but cannot stand it any longer.
Keith

mo.v (Joined: 29 Sep 04; Posts: 2363; Credit: 14,611,758; RAC: 0)

We're lucky at CPDN that, although there are only two programmers, Tolu and Milo, they make tremendous efforts to keep the servers running, maintain sufficient storage space for completed work, and supply constant work for as many types of computer as they can. It's not always easy, and they are very inventive.

I must say, though, that I have a good deal of sympathy with the SETI programmers. SETI is often used as a testbed for new BOINC features and computer capabilities, and other projects often benefit later from what SETI tries out.

tullio (Joined: 6 Aug 04; Posts: 264; Credit: 965,476; RAC: 0)

People using SETI build home supercomputers by loading graphics boards, mostly NVIDIA, into their systems using CUDA, and complain when things do not work. But the drivers of the graphics boards change continuously, and the SETI programmers are overloaded trying to keep up with the changes. I do not use any graphics board and am running both SETI@home and Astropulse (a different application) on my Linux box with no problem at all. A SETI cruncher is proposing a boycott of SETI since he is not satisfied with its speed. People spend thousands of dollars on graphics boards such as Tesla and Fermi and think they have a right to new versions of SETI apps. This is absurd.
Tullio

©2024 cpdn.org